Introduction

You've just had a brilliant idea for an app and have decided to get to work on it. You decide to start developing as a team. Each team member uses a different machine with a different operating system, and you all work remotely. You choose to deploy the application to various environments, such as testing, production, QA, and staging.

To build the app as a team, you will probably need to:

Use a version control system.

Set up an environment and install dependencies.

Replicate the same setup across multiple systems.

Collaborate with others on the same project.

Things can get complicated quickly, which is why a tool such as Docker is useful for meeting these needs.

This article will help you gain a practical understanding of Docker's most commonly used features. A solid grasp of these concepts will help you avoid common mistakes and use Docker more efficiently.

Containerization

Containerization is the process of packaging an application and its dependencies (such as system libraries, config files, etc.) into a single unit known as a container.

The main benefit of containerizing your applications is that you can run them on any supported host.

Containers

Containers are small packages that include the application code for your software services as well as any dependencies, such as particular versions of the libraries and language runtimes needed to run that code.

A container runs as an isolated process on the host and shares the host's kernel instead of booting its own operating system. This makes containers relatively lightweight in comparison to virtual machines, and easy to create, modify, and run.

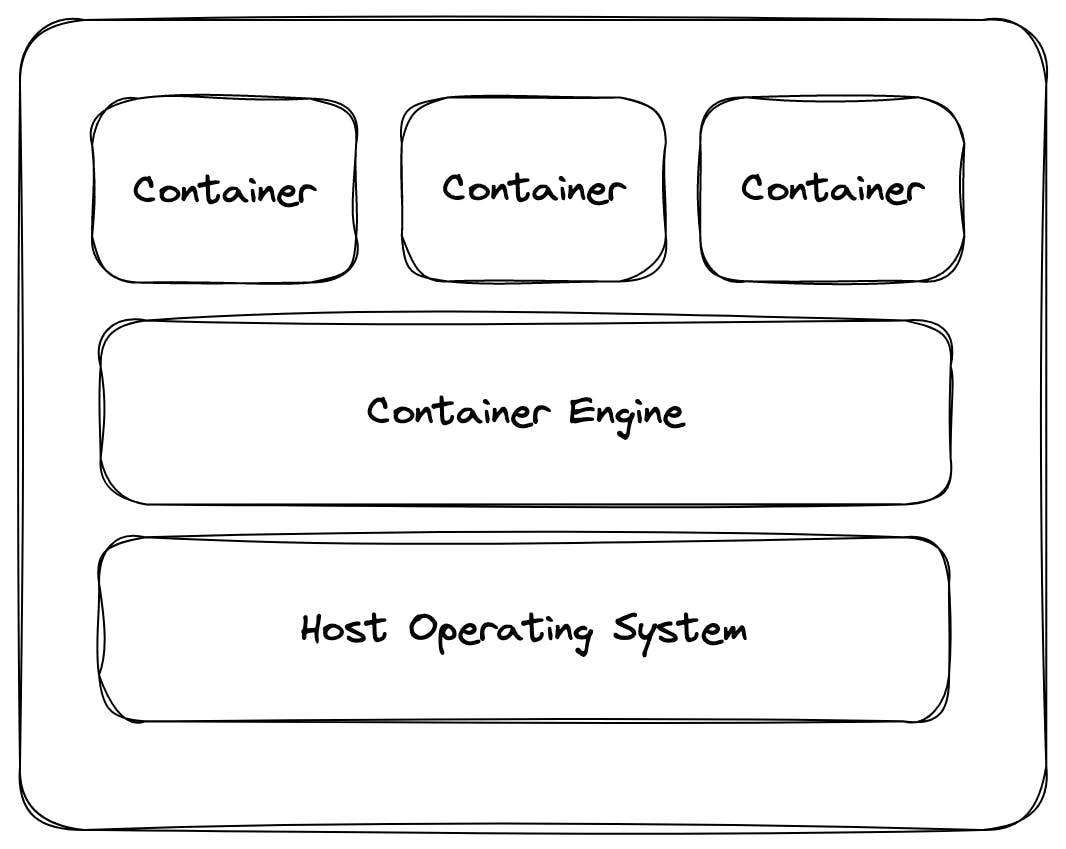

Containers share system resources such as storage, CPU, memory, and network via an abstraction provided by a container engine.

A single host can run multiple containers because each one runs in an isolated space on the host's operating system.

Why do we need containers?

Predictability

Docker ensures that runtime dependencies and application resources are consistent across containers created from the same image.

Imagine working on a distributed team where each developer uses a different operating system to run an application. The predictability of containers helps in several ways, such as:

Resolving dependency incompatibilities from host to host.

Reproducing a container's state across different environments.

Isolation

Containerization isolates containers running on the same host from one another.

Isolation provides the following benefits:

Resource usage can be controlled per container. By default, a container has no restrictions on the use of resources and is free to utilize as much of a given resource as the kernel scheduler of the host permits, but limits can be applied to each container independently.

Separation of responsibility in large teams, i.e., developers can focus on business logic while the operations team manages deployment.

**Refer to the Control Groups (Cgroups) section for more info regarding resource constraints in Docker.**

Portability

Docker allows you to create reproducible images, so you can deploy your application quickly with minimal manual effort and setup.

You can share images with others and pull official or custom images from a hosted registry. Several cloud services, such as GCP or AWS, offer registries to help deploy and manage containers on their platforms.

Security

By default, Docker gives each container its own set of namespaces and control groups, so containers can't see each other's processes.

Each container also gets its own network stack, meaning that a container doesn’t get privileged access to the sockets or interfaces of another container. Containers can interact with each other via their network interfaces.

Containers Under the Hood

Containers are nothing more than a combination of a few Linux kernel features at their core. This article will go through the main features.

Isolated Environments (Chroot'd)

chroot, short for change root, is a Linux command that sets the root directory of a new process. In the container use case, it lets you set the root directory of a process to the location of the new container's root directory, so the process sees only the files beneath it.

Container runtimes use this kind of feature to set the root directory of newly created containers.

Namespaces

An isolated filesystem alone may not be enough, because a process inside it may still have access to processes running in other containers or on the host.

Docker utilizes namespaces to provide an additional layer of security. They allow you to encapsulate processes and prevent them from observing or interacting with processes running in other containers or on the host.

Control Groups (Cgroups)

By default, a container has no restrictions on the use of resources and is free to utilize as much of a given resource as the kernel scheduler of the host permits.

Control groups provide resource accounting and limiting, which lets you control how much memory, CPU, or disk I/O a container can use.

Consider a system with 3 containers (A, B, and C) running on a host with a limited amount of computational resources. Without limits, Container A may use up the host's memory, starving the other containers.

If the kernel determines that there is not enough memory to perform critical system functions, it may start killing processes to free memory. Any process can be killed, including Docker and other essential programs, and the whole system could in theory go down if the wrong process is killed.

Docker

Docker is an open-source project that provides tools for building, running, and deploying containers and managing application deployments. The Docker engine also exposes an API for working with images and the containers created from them. It helps deploy applications efficiently and securely in the cloud.

Images

A Docker image is a read-only template used to create Docker containers, like a blueprint of a pre-made container. Images can be created manually or via Dockerfiles.

Dockerfile

The Dockerfile provides the instructions for creating a container image that runs your program and includes all the dependencies your application needs.

# Base image
FROM node:18-alpine

# Default command executed when the container starts
CMD ["node", "-e", "console.log(\"Hello, World\")"]

The file is processed by docker build, one of the Docker engine's most used features, which executes the instructions sequentially.

Executing Images

If you have the Docker CLI installed, you could utilize the following commands to build and run images.

docker build: instructs the Docker engine to build an image.

docker run: instructs the Docker engine to run a container from an image.

You can list running containers with the following command (add the -a flag to include containers that have already exited):

docker ps

FROM

Defines a base for your image. In this instance, it's node:18-alpine.

CMD

Allows you to specify the default command that is launched when a container is started from this image. Only one CMD takes effect per Dockerfile; if there are more, only the last CMD instruction is used.
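As a rough illustration of the last-CMD rule, the TypeScript sketch below scans the text of a single-stage Dockerfile and returns the CMD line that would take effect. The function name effectiveCmd and the sample Dockerfile are made up for this example. Docker applies the rule itself at build time, and the sketch ignores line continuations and multi-stage builds.

```typescript
// Hypothetical helper for illustration only; not part of Docker.
function effectiveCmd(dockerfile: string): string | undefined {
  const cmdLines = dockerfile
    .split("\n")
    .map((line) => line.trim())
    // Dockerfile instructions are case-insensitive, so match "CMD" loosely.
    .filter((line) => /^CMD\s/i.test(line));

  // Only the last CMD takes effect; earlier ones are ignored.
  return cmdLines.length > 0 ? cmdLines[cmdLines.length - 1] : undefined;
}

// Sample input with two CMD instructions (assumed for this example).
const sampleDockerfile = [
  "FROM node:18-alpine",
  'CMD ["node", "-e", "console.log(1)"]',
  'CMD ["node", "hello.js"]',
].join("\n");

const activeCmd = effectiveCmd(sampleDockerfile); // 'CMD ["node", "hello.js"]'
```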

External Files and Resources

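Let's create a simple TypeScript file, hello.ts, that contains the following immediately invoked function expression. Node.js runs JavaScript, so the file is assumed to be compiled to hello.js (for example, with tsc) before the image is built.

(function helloWorld(): void {
  console.log("Hello, World");
}());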

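The following Dockerfile copies the files into the image and runs hello.js:

# Base image
FROM node:18-alpine

# Copy files from the build context into the image's filesystem
COPY . .

# Run the script when the container starts
CMD [ "node", "hello.js" ]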

COPY vs. ADD

Both instructions can be used to copy files from a specific source on the host machine to a specific destination within the Docker image. However, ADD also allows you to specify a URL as the source, in which case it will download the file from the URL and add it to the image. Additionally, ADD automatically extracts local tar archives (including gzip, bzip2, and xz compressed ones) into the destination. Archives downloaded from a URL are not extracted, and zip files are not unpacked.

**It is generally recommended to use COPY unless you need the additional functionality provided by ADD.**

# Use ADD to download a file from a URL (remote archives are added as-is, not unpacked)

ADD http://<resource>.com/<file>.tgz /usr/src/myapp/

# Use COPY to copy a file from the host machine to the image

COPY <path>/<file>.txt /usr/src/myapp/

Tags

Docker tags are just an alias for an image ID. The tag's name must be an ASCII character string and may include lowercase and uppercase letters, digits, underscores, periods, and dashes. In addition, a tag name must not begin with a period or a dash, and it can contain at most 128 characters.

For example: api:alpha and api:latest.
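The naming rules above can be expressed as a regular expression. The following TypeScript sketch is one way to check a tag before using it. isValidTag and TAG_PATTERN are hypothetical names chosen for this example, not part of any Docker tooling.

```typescript
// Hypothetical helper that mirrors the tag rules described in the text.
// First character: letter, digit, or underscore (no leading period or dash).
// Remaining characters: letters, digits, underscores, periods, or dashes,
// for a total length of at most 128 characters.
const TAG_PATTERN = /^[A-Za-z0-9_][A-Za-z0-9_.-]{0,127}$/;

function isValidTag(tag: string): boolean {
  return TAG_PATTERN.test(tag);
}

// Example inputs: the tag is the part after the colon in "api:alpha".
const candidateTags: string[] = ["alpha", "latest", "v1.2.3", ".hidden", "-rc1"];
const tagChecks = candidateTags.map((tag) => ({ tag, valid: isValidTag(tag) }));
```

Here the first three candidates pass the check, while ".hidden" and "-rc1" are rejected because of their first character.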

Layers

Docker images are built from layers. Each layer corresponds to an instruction in the Dockerfile and records the filesystem changes made by that instruction; the layers are stacked to form the final image.

Multi-Stage Builds

Docker allows you to use one container to build your app, and another to run it. This can be useful if you have significant dependencies to build your app, but you don't need those dependencies to run it.

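# Build stage: prepare the application files
# (a real project would install and compile its dependencies here)
FROM node:12-stretch AS builder

USER node

WORKDIR /home/node/code

COPY --chown=node:node index.js .

# Runtime stage: copy only what is needed from the build stage
FROM node:12-stretch

USER node

WORKDIR /home/node/code

COPY --from=builder --chown=node:node /home/node/code/index.js .

CMD ["node", "index.js"]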

Docker Hub

Docker Hub is a hosted registry service for finding and sharing images. It's like GitHub, but for container images.

Pushing Images

docker push [OPTIONS] NAME[:TAG|@DIGEST]

Pulling Images

docker pull [OPTIONS] NAME[:TAG|@DIGEST]
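To make the NAME[:TAG|@DIGEST] syntax more concrete, here is a minimal TypeScript sketch that splits a reference into its parts. The ImageReference type and the parseImageReference function are assumptions made for illustration. The sketch only covers the form shown above (a name followed by either a tag or a digest) and does not validate any of the parts.

```typescript
// Hypothetical type and helper for illustration; not part of the Docker CLI.
interface ImageReference {
  name: string;
  tag?: string;
  digest?: string;
}

function parseImageReference(reference: string): ImageReference {
  // A digest, if present, follows the "@" separator.
  const atIndex = reference.indexOf("@");
  if (atIndex !== -1) {
    return {
      name: reference.slice(0, atIndex),
      digest: reference.slice(atIndex + 1),
    };
  }

  // A tag follows the last ":" only if that colon comes after the last "/".
  // Otherwise the colon belongs to a registry port, as in "localhost:5000/api".
  const colonIndex = reference.lastIndexOf(":");
  const slashIndex = reference.lastIndexOf("/");
  if (colonIndex > slashIndex) {
    return {
      name: reference.slice(0, colonIndex),
      tag: reference.slice(colonIndex + 1),
    };
  }

  // With no tag or digest, docker pull falls back to the "latest" tag.
  return { name: reference, tag: "latest" };
}

const tagged = parseImageReference("api:alpha");
const withRegistryPort = parseImageReference("localhost:5000/api");
```

In this sketch, "api:alpha" yields the name "api" with the tag "alpha", while "localhost:5000/api" keeps the port as part of the name and falls back to the "latest" tag.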

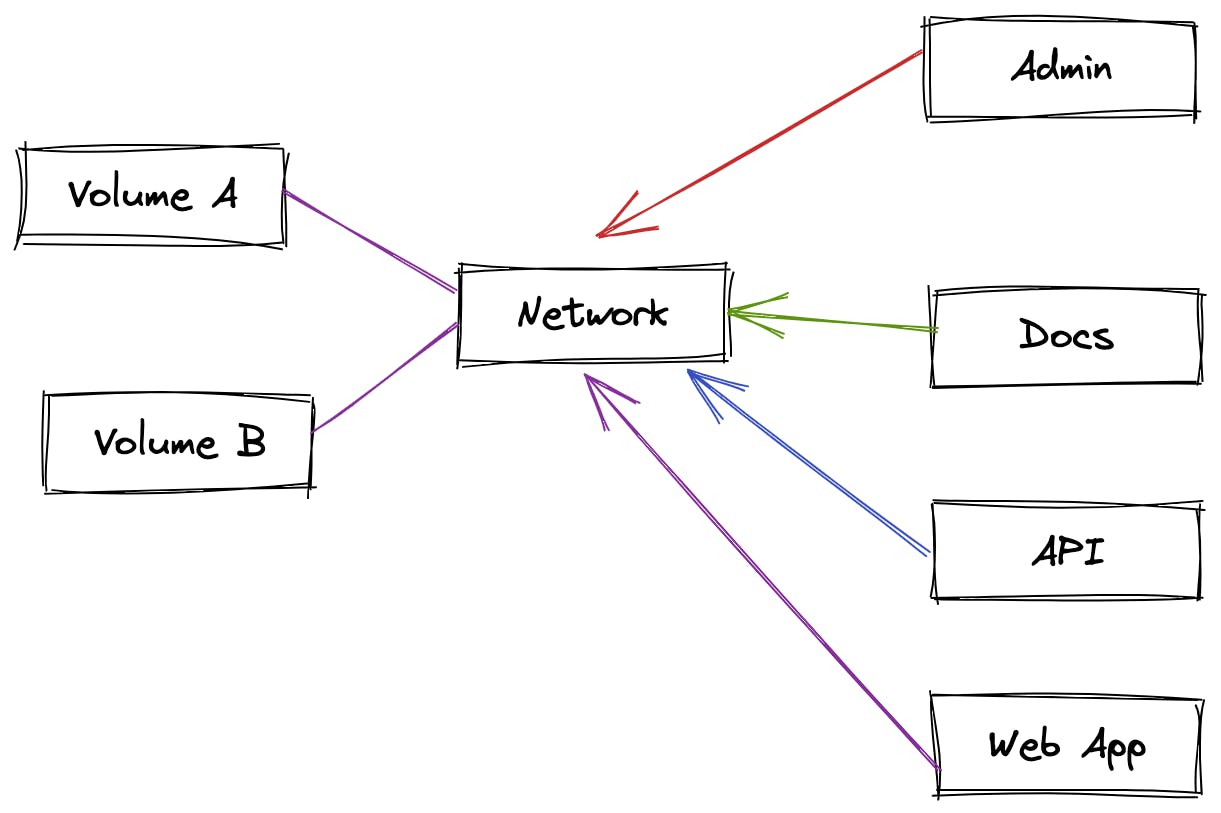

Networking

The Docker networking architecture is a crucial component of Docker’s containerization platform, acting as an abstraction layer for network communication between connected containers. It also enables containers to talk to external services and to the host. Containers on different hosts can communicate over the network as well, allowing them to exchange data and reach each other's services.

Networking in Docker provides several benefits:

It enables a more straightforward way of provisioning applications on top of existing infrastructure rather than creating a new one from scratch.

It helps simplify development workflows by removing the need for setting up networks manually every time you deploy changes through CI/CD pipelines or via manual deployment processes.

Data Persistence

Docker containers are ephemeral by default: data written inside a container is lost when the container is removed. You can make a container stateful by attaching a volume to it.

Volumes

A volume is like an external drive or network share. It lets you mount a directory that lives outside the container's filesystem into your container, so you can access it from inside.

Volumes can be shared not only by the same container between runs but also between different containers. If you have two containers and want to consolidate their logs into one place, volumes could help with that.

Volumes are created on the host and exist independently of the containers that use them. For example, suppose an application running inside a container accesses a directory through a mounted volume. The same volume can also be accessed from outside that container, or mounted into other containers, without changing any files inside the container itself. Each container can mount the volume at its own path, so many applications can share a single set of files without path conflicts between containers.

Volumes are the preferred mechanism for persisting data generated by and used by Docker containers.

Volumes are stored on the host in an area managed by Docker, independent of any container's lifecycle, which means data persists when a container is stopped, removed, or recreated, and across host reboots.

Volumes provide easy access to files from within a container. You can mount a volume directly into your container, allowing you to pull in files from outside of your container (e.g., from remote storage such as S3, if you use a volume driver that supports it). This makes it easier to build robust applications that scale based on their runtime requirements.

Volumes can be removed once their data is no longer needed, so that disk space isn't wasted on data that is no longer used.

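Local volumes have no fixed size by default and grow as data is written; whether a volume can be resized explicitly depends on the volume driver.

Volumes can be backed up at any time, for example by mounting the volume into a temporary container and archiving its contents. Snapshot support depends on the volume driver.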

An existing volume can be attached to a newly started container, allowing for easy debugging and testing against the same data without recreating it from scratch.

Conclusion

Whether you're considering using containers for your next project, or simply want to get a deeper understanding of them, having this information at your disposal will help you make the right decisions regarding containerization. Thank you for reading this article, and good luck with your project.